Saturday, December 27, 2014

ITPalooza Kanban and Continuous Delivery presentation

This was definitely a great event to share Kanban and Continuous Delivery experiences with other agile practitioners in South Florida. I thank ITPalooza and the South Florida Agile Association for the opportunity.

Friday, December 26, 2014

On security: news without validation - The case of ntpd on Mac OS X

It is a shame that even Hacker News, like many others, reported inaccurate information about the several recent NTP vulnerabilities affecting the ntpd daemon on *NIX systems.

Apple computers are not patched automatically unless the user opts in, and that feature was only added with Yosemite, so it is most likely not even available on many of the Macs in use out there.

Sysadmins should be encouraged to reach out to their user base so that the Macs get patched. Unlike Ubuntu and other Linux distros, where most likely ntpdate is used to synchronize time, Macs run the ntpd daemon. Yes, this is not just a server issue when it comes to Mac OS X.

BTW, back to the ntpd vulnerabilities: follow Apple's instructions for correct remediation. As explained there, 'what /usr/sbin/ntpd' should be run to check the proprietary OS X ntpd version.

What is interesting here is that 'ntpd --version' still returns 4.2.6 after the patch, a version which, according to the official ntpd distribution announcement, does not contain the fix. Version 4.2.8 does.

Thursday, December 25, 2014

Do you practice careful and serious reading?

Do you practice careful and serious reading? This is the ultimate question I have come to ask when someone claims to have read a book and I clearly find out that he or she meant "eyes went" rather than "mind went" through its content. There is a difference between "becoming familiar" with a topic and "digesting" it.

When you carefully read a book you take notes. I personally do not like to highlight books, as the highlighters I have seen so far (as of December 2014) will literally ruin the book. I think taking notes not only helps with a deep understanding of the content but ultimately becomes a great summary for further "quick reference".

When you seriously read a book you know what you agree and disagree with. You are not on a sofa, distracted by familiar sounds. You are in a quiet space, fully concentrated on receiving a message, processing it and coming up with your own conclusions, questions and ultimately, most importantly, answers to the unknown, which now suddenly become part of your personal wisdom.

It is discouraging to sustain a debate around a book's content when there has been no careful and serious reading. In my opinion "reading", when it comes to a specific subject matter, means "studying", and of course you can only claim you have studied a subject if you have carefully and seriously read the related material. Seeing a book is not the same as looking into a book. Listening to an audio book is not the same as hearing it.

Thursday, December 18, 2014

How to SVN diff local against newer revision of item

From the command line I would expect a simple 'svn diff local/path/to/resource' to show the differences between the local copy and the Subversion server copy. However that is not the case: a special '-r HEAD' needs to be added to the command. Here is how to add an alias for 'svndiff' so that you can get the differences:

    # Omit the path or use just "." to get full current directory differences
    svn diff -r HEAD local/path/to/resource
    # So it makes sense to use an alias, for example in ~/.bash_profile on a Mac you could add:
    alias svndiff="svn diff -r HEAD"

Tuesday, December 16, 2014

NodeJS https to https proxy for transitions to Single Page applications like AngularJS SPA

If you are working on a migration from classical web sites to Single Page Applications (SPA) you will find yourself dealing with a domain where all the code lives, mixed technologies for which you are forced to run the backend server, and a bunch of inconveniences like applying database migrations or redeploying backend code.

You should be able to develop the SPA locally, though, and consume the APIs remotely, but you probably do not want to allow cross-domain requests or even split the application across two different domains.

A reverse proxy helps a lot here. With a reverse proxy you can concentrate on developing just your SPA bits locally while hitting the existing API remotely, and still be able to deploy the app remotely when ready. All you need to do is detect where the SPA is running and route the API requests through the local proxy.

Node http-proxy can be used to create an https-to-https proxy as presented below:

    /*
     - https-to-https-proxy.js: Tested with Apache as target host
     - Preconditions: Have key/cert generated as in:
         openssl genrsa -out key.pem 2048
         openssl req -new -key key.pem -out cert.csr
         openssl x509 -req -days 3650 -in cert.csr -signkey key.pem -out cert.pem
    */
    var proxyTargetHost = 'test.sample.com',
        proxyTargetPort = 443,
        proxyLocalHost = 'localhost',
        proxyLocalPort = 8443,
        sslKeyPath = 'key.pem',
        sslCertPath = 'cert.pem',
        https = require('https'),
        httpProxy = require('http-proxy'),
        fs = require('fs');

    // Local https listener that forwards everything to the remote https target
    var proxy = httpProxy.createServer({
      ssl: {
        key: fs.readFileSync(sslKeyPath, 'utf8'),
        cert: fs.readFileSync(sslCertPath, 'utf8')
      },
      target: 'https://' + proxyTargetHost + ':' + proxyTargetPort,
      secure: false, // Depends on your needs, could be true to verify the target certificate.
      rejectUnauthorized: false,
      hostRewrite: proxyLocalHost + ':' + proxyLocalPort
    }).listen(proxyLocalPort);

    // Present the target host name to the backend instead of localhost
    proxy.on('proxyReq', function(proxyReq, req, res, options) {
      proxyReq.setHeader('Host', proxyTargetHost);
    });

    // Add CORS headers to every proxied response
    proxy.on('proxyRes', function (proxyRes, req, res) {
      res.setHeader("Access-Control-Allow-Origin", "*");
      res.setHeader("Access-Control-Allow-Headers", "X-Requested-With");
      res.setHeader("Access-Control-Allow-Credentials", "true");
    });

Wednesday, December 10, 2014

Adding ppid to ps aux

The usual BSD-style invocation of the ps command ('ps aux') does not return the parent process id (ppid). To add it you need to use the "o" option as follows:

    ps aux|head -1
    # Returns: USER PID %CPU %MEM VSZ RSS TTY STAT START TIME COMMAND
    ps axo user,pid,ppid,pcpu,pmem,vsz,rss,tname,stat,start,time,args|head -1
    # Returns: USER PID PPID %CPU %MEM VSZ RSS TTY STAT STARTED TIME COMMAND

Tuesday, December 09, 2014

Is your bank or favorite online shop insecure? You are entitled to act as a conscious user

UPDATE: A+ should be your target now.

Is your bank or favorite online shop insecure? You are entitled to act as a conscious user. How?

The first thing any company out there should do with their systems is to make sure that traffic between the customer and the service provider is strongly encrypted. All you need to do is visit the SSL Labs SSL Server Test, enter the URL of the site and wait for the results.

If you do not get an A (right now *everybody* is vulnerable to the latest POODLE attack, so expect to see a B as the best case scenario) you should be concerned. If you get a C or lower please contact the service provider immediately, demanding that they correct their encryption problems.

Be especially wary of those who have had their websites excluded from SSL Labs. Security *just* by obscurity does not work!

Monday, December 08, 2014

Libreoffice and default Microsoft Excel 1900 Date System

A custom date in the m/d/yy format is not formatted by LibreOffice; a number is shown instead. This number is the serial day counted from January 1, 1900, so 5 corresponds to January 5, 1900. But there is a leap year bug (Excel treats 1900 as a leap year) for which a correction needs to be made (if (serialNumber > 59) serialNumber -= 1), as you can see in action in this runnable.

So if you convert Excel to CSV, for example, and you get a number instead of an expected date, open that Excel file from the LibreOffice GUI and convert a cell to Date to see if the output makes sense as a date. At that point, convinced that those are indeed dates, all you need to do is apply the algorithm to the numbers to convert them to dates in the resulting CSV.
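As a minimal sketch of that conversion, assuming Python is available for post-processing the CSV, the function below applies the same correction (serials above 59 are shifted back by one day) and then counts days from December 31, 1899, so that serial 1 lands on January 1, 1900. The function name is hypothetical and not part of any library.

```python
from datetime import date, timedelta


def excel_serial_to_date(serial):
    """Convert a 1900-date-system serial day number to a calendar date."""
    if serial < 1:
        raise ValueError("serial day numbers start at 1 (January 1, 1900)")
    # The 1900 date system counts a nonexistent February 29, 1900,
    # so every serial above 59 is one day ahead of the real calendar.
    if serial > 59:
        serial -= 1
    # Serial 1 is January 1, 1900, so count from December 31, 1899.
    return date(1899, 12, 31) + timedelta(days=serial)


if __name__ == "__main__":
    for serial in (1, 59, 61, 42005):
        print(serial, excel_serial_to_date(serial).isoformat())
```

Note that serial 60 itself has no real calendar date; with the correction above it maps to February 28, 1900, the same as serial 59.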

Sunday, December 07, 2014

On Strict-Transport-Security: Protecting your app starts by protecting your users

Protecting your app starts by protecting your users. There are several HTTP headers you should already be using in your web apps, but one that is usually overlooked is Strict-Transport-Security.

This header ensures that the browser will refuse to connect if there is a certificate problem, such as an invalid certificate presented in a man-in-the-middle (MITM) attack launched by malware on a user's computer. Without this header the user could click through the warning and give away absolutely all "secure" traffic to the attacker. Additionally this header makes sure the browser uses only the https protocol, which means no insecure/unencrypted/plain text communication happens with the server.

The motivation for not using this header could be to allow mixing insecure content in your pages or to allow using self-signed certificates in non-production servers. I believe such motivation is dangerous when you consider the risk. Your application will be more secure if you address security in the backend and in the front end, the same way you should do validations in the front end and the backend.

    Header unset ETag
    Header set X-Frame-Options: sameorigin
    Header set X-XSS-Protection: "1; mode=block"
    Header set X-Content-Type-Options: nosniff
    Header set X-WebKit-CSP: "default-src 'self'"
    Header set X-Permitted-Cross-Domain-Policies: "master-only"
    Header set Strict-Transport-Security "max-age=31536000; includeSubDomains"
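To make the header value less of a magic string, here is a small sketch in Python that parses a Strict-Transport-Security value and checks it against a policy like the one configured above (one year, subdomains included). The function names and the one-year threshold are assumptions for illustration; a check like this could live in an automated test that inspects response headers.

```python
ONE_YEAR = 365 * 24 * 60 * 60  # 31536000 seconds, the max-age used above


def parse_hsts(value):
    """Parse a Strict-Transport-Security value into (max_age, include_subdomains)."""
    max_age = None
    include_subdomains = False
    for directive in value.split(";"):
        name, _, argument = directive.strip().partition("=")
        name = name.strip().lower()  # directive names are case-insensitive
        if name == "max-age":
            max_age = int(argument.strip().strip('"'))
        elif name == "includesubdomains":
            include_subdomains = True
    if max_age is None:
        raise ValueError("the max-age directive is required")
    return max_age, include_subdomains


def is_strong_policy(value, minimum_age=ONE_YEAR):
    """True when the policy lasts at least minimum_age seconds and covers subdomains."""
    max_age, include_subdomains = parse_hsts(value)
    return max_age >= minimum_age and include_subdomains


if __name__ == "__main__":
    print(is_strong_policy("max-age=31536000; includeSubDomains"))
```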

Friday, December 05, 2014

On risk management: Do you practice Continuous Web Application Security?

Do you practice Continuous Web Application Security? Continuously delivering software should include security. Just like backup-restore tests, this is a very important topic that is usually overlooked.

Here is an affordable practical proposal for continuous web application security:

- Have an Ubuntu Desktop (I personally like to see what is going on when it comes to UI-related testing) with your end-to-end (E2E) platform of choice running on it.

- From your continuous integration (CI) server of choice, (remotely) hit a local runner that triggers your automated E2E test suite against your application URL. I strongly believe that E2E concerns belong to whoever is in charge of developing the UX/UI.

- E2E tests should open your browser of preference and you should be able to *see* what is going on in the browser.

- A proxy-based passive scanner like zaproxy should be installed. Below is how to install it from sources:

    TODAY=`date +%Y-%m-%d`
    INSTALL_DIR=~/
    cd $INSTALL_DIR
    git clone https://github.com/zaproxy/zaproxy.git
    cd zaproxy/
    ./gradlew :zap:distDaily
    cd zap/build/distributions/
    unzip ZAP_D-${TODAY}.zip
    cd ZAP_D-${TODAY}/
    ## Manual steps
    # Start the GUI to install all addons
    # ./zap.sh -addoninstallall -addonupdate
    # Close the GUI and remove the HUD addon because otherwise it will interfere with tests
    # ./zap.sh -addonuninstall hud
    # Close the GUI and run the below to finally run zaproxy on port 8081:
    # ./zap.sh -config connection.timeoutInSecs=3000 -config proxy.port=8081
    # Go to zaproxy menu export the Root CA certificate using "Tools | Options | Dynamic SSL Certificates | Save"
    # To use the proxy in chrome use the below:
    # google-chrome --proxy-server="http://localhost:8081"
    # Go to chrome settings search for "certificate", click "Manage Certificates | Authorities | Import | All Files"; select the exported cer file and select "Trust this certificate for identifying websites"
    # To not use the proxy in chrome use the below:
    # google-chrome --no-proxy-server

- If you want to start the proxy with a user interface, so you can look into the history of found vulnerabilities through a nice UI, and assuming you installed it from the recipe, then run it as '/opt/zap/zap.sh' or, if you get issues with your display like it happened to me while using xrdp, with 'ssh -X localhost /opt/zap/zap.sh'.

In order to proxy all requests from Chrome we need to follow the steps below.

- From the zaproxy menu export the Root CA certificate using "Tools | Options | Dynamic SSL Certificates | Save"

- From Chrome settings search for "certificate", click "Manage Certificates | Authorities | Import | All Files"; select the exported cer file and select "Trust this certificate for identifying websites"

- Your E2E runner must be started after you run the commands below, because the browser has to be started after them in order to use the proxy.

    export http_proxy=localhost:8080
    export https_proxy=localhost:8080

- To stop the proxy and resume E2E without it, we just need to unset the two variables and restart the E2E runner.

LEGACY 12.04: For the proxy to get the traffic from Chrome you need to configure the Ubuntu system proxy with the commands below. All traffic will now go through zaproxy. If you want to turn the proxy off just run the first command. To turn it on run just the second, but run them all if you are unsure about the current configuration. This is a machine where you just run automated tests, so it is expected that you run no browser manually there, BTW, and that is the reason I forward all the http traffic through the proxy:

    gsettings set org.gnome.system.proxy mode 'none'
    gsettings set org.gnome.system.proxy mode 'manual'
    gsettings set org.gnome.system.proxy.http port 8080
    gsettings set org.gnome.system.proxy.http host 0.0.0.0
    gsettings set org.gnome.system.proxy.https port 8080
    gsettings set org.gnome.system.proxy.https host 0.0.0.0
    gsettings set org.gnome.system.proxy ignore-hosts "['']"

- Every time your tests run you will be collecting data about possible vulnerabilities.

- You could go further now and inspect the zaproxy results via the REST API, consuming JSON or XML in your CI pipeline, in fact stopping whole deployments from happening. You can take a less radical approach and get the information in plain HTML. Below, for example, we extract in HTML all the alerts obtained while passively browsing http://google.com. It is assumed that you have run '/opt/zap/zap.sh -daemon', which allows access to the REST API from the http://zap base URL:

    # use JSON (default), XML or HTML
    http://zap/HTML/core/view/alerts/?zapapiformat=HTML&baseurl=http%3A%2F%2Fgoogle.com

- If you want to access this API from outside the box you will need to run '/opt/zap/zap.sh -daemon -host 0.0.0.0 -p 8081'; however, keep in mind the poor security available for this API.

- Do not forget to restart the proxy after vulnerabilities are fixed, or stop and start it automatically before the tests are run, so you effectively collect only the latest vulnerabilities.

- Your CI might have a plugin for active scanning (see https://wiki.jenkins-ci.org/display/JENKINS/Zapper+Plugin) or not. Starting an active scan automatically should be easy.
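The idea of stopping a deployment from the CI pipeline based on the alerts can be sketched roughly as below, in Python. This is only an illustration under stated assumptions: the JSON variant of the alerts URL mirrors the HTML one shown above, and the response is assumed to be an object with an "alerts" list whose items carry a "risk" field with one of the values Informational, Low, Medium or High. Verify both against the API of your zaproxy version before relying on it. The function names are hypothetical.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

# Assumed risk labels, from least to most severe.
RISK_ORDER = ["Informational", "Low", "Medium", "High"]


def alerts_url(base_url, zap="http://zap"):
    """Build the alerts view URL for the given site (JSON flavor, assumed)."""
    query = urlencode({"zapapiformat": "JSON", "baseurl": base_url})
    return zap + "/JSON/core/view/alerts/?" + query


def blocking_alerts(payload, threshold="High"):
    """Return the alerts whose risk is at or above the threshold."""
    minimum = RISK_ORDER.index(threshold)
    return [alert for alert in payload.get("alerts", [])
            if alert.get("risk") in RISK_ORDER
            and RISK_ORDER.index(alert["risk"]) >= minimum]


def fetch_alerts(base_url):
    """Fetch and decode the alerts; requires a running, reachable zaproxy."""
    with urlopen(alerts_url(base_url)) as response:
        return json.load(response)


if __name__ == "__main__":
    # Hand-written sample payload, only to show the filtering step.
    sample = {"alerts": [{"alert": "Example finding", "risk": "High"},
                         {"alert": "Another finding", "risk": "Low"}]}
    found = blocking_alerts(sample, threshold="Medium")
    print("deployment should stop" if found else "deployment can continue")
```

A CI job could call fetch_alerts() after the E2E run and exit with a non-zero status when blocking_alerts() returns anything, which is one way to stop the deployment.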

Congratulations. You have just added continuous web application security to your SDLC.

How to parse any valid date format in Talend?

How to parse any valid date format in Talend? This is a question that comes up every so often and the simple answer is that there is no plain simple way that will work for everybody. Valid date formats depend on the locale, and if you are in the middle of a project supporting multiple countries and languages, YMMV.

You will need to use Talend routines. For example you can create a custom stringToDate() method. In its simplest form (considering you are tackling just one locale) you will pass just a String as a parameter (not the locale). You will need to add the formats you will allow, as you see in the function below. The class and the main method are just for testing purposes and you can see it in action here. These days it is so much easier to share snippets of code that actually run ;-)

    /**
     *
     * See it in action in http://runnable.com/VIHI8AhI-_MpHcsM/multipledatesparser-java
     *
     */
    class MultipleDatesParser {
        public static String stringToDate (String dateToParse) throws java.lang.Exception {
            String parsedDate = null;
            java.text.SimpleDateFormat sdf = null;
            try {
                // "dd" is the day of the month ("DD" would be the day of the year)
                sdf = new java.text.SimpleDateFormat("MM/dd/yy");
                parsedDate = sdf.parse(dateToParse).toString();
            } catch (Exception e1) {
                try {
                    sdf = new java.text.SimpleDateFormat("MM/dd/yyyy");
                    parsedDate = sdf.parse(dateToParse).toString();
                } catch (Exception e2) {
                    //Other date formats here following the pattern above or ...
                    throw e2;
                }
            }
            return parsedDate;
        }

        public static void main (String[] args) throws java.lang.Exception {
            System.out.println(stringToDate("12/20/2014"));
            System.out.println(stringToDate("12/20/14"));
        }
    }

Wednesday, December 03, 2014

Are your web security scanners good enough?

Are your web security scanners good enough? Note that I use the plural here, as there is no silver bullet. There is no such thing as the perfect security tool.

More than two years ago I posted a self-starting guide to get into penetration testing, which brought some interest in talks, some consultancy hours and good friends. Not much had changed until last month, when we learned from the Google Security Blog that a new tool called Firing Range was being open sourced. I said to myself "finally we have a test bed for web application security scanners" and then the next question immediately popped up: "Are the web security scanners I normally use good enough at detecting these well-known vulnerabilities?"

I would definitely like to get feedback, private or public, about tool results. For now I have asked 4 different open source tools about their plans to enhance their scanners so they can detect vulnerabilities like the ones Firing Range exposes. My tests so far are telling me that I need to look for other scanners, as these 4 do not detect the exposed vulnerabilities. I have posted a comment on the Google post but it has not been approved so far.

I set out to answer the main question in this post, but then I realized that if everyone out there ran tests against Firing Range with their tools (free or paid), we could gather some information about which ones are doing a better job, as we speak, at finding Firing Range vulnerabilities. Here is the list of my questions so far:

- Can anybody share results (bad or good) about web application scanners running against Firing Range?

- Can anybody share other test bed software (similar to Firing Range) they are currently using, perhaps a cool honeypot for others to further test scanners?

- Skipfish: https://code.google.com/p/skipfish/issues/detail?id=209

- Nikto: It is a Web Server Scanner and not a Web Application Scanner https://github.com/sullo/nikto/issues/191

- w3af: https://github.com/andresriancho/w3af/issues/6451

- ZAP: https://code.google.com/p/zaproxy/issues/detail?id=1422

Labels: csrf, firing range, nikto, owasp, pentest, scanner, security, skipfish, vulnerability, w3af, web, xss, zap

Wednesday, November 26, 2014

Error level or priority for Linux syslog

If you grep your Linux server logs from time to time you might be surprised at the lack of an error level. If you want to know, for example, all error logs currently in syslog, how would you go about it? The simple answer is that you cannot without changing the log format in the syslog configuration (/etc/rsyslog.conf when rsyslog is in use).

Let us say you configured to see the priority (%syslogpriority%) as the first character in the log file:

    $ vi /etc/rsyslog.conf
    ...
    #
    # Use traditional timestamp format.
    # To enable high precision timestamps, comment out the following line.
    #
    $template custom,"%syslogpriority%,%syslogfacility%,%timegenerated%,%HOSTNAME%,%syslogtag%,%msg%\n"
    $ActionFileDefaultTemplate custom
    ...
    $ sudo service rsyslog restart

To filter information look at the description of priorities. From http://www.rsyslog.com/doc/queues.html:

    Numerical Code   Severity
    0                Emergency: system is unusable
    1                Alert: action must be taken immediately
    2                Critical: critical conditions
    3                Error: error conditions
    4                Warning: warning conditions
    5                Notice: normal but significant condition
    6                Informational: informational messages
    7                Debug: debug-level messages

A simple grep helps us now:

    $ grep '^[0-3]' /var/log/syslog
    ...
    3,3,Nov 26 11:56:15,myserver,monit[17496]:, 'myserver' mem usage of 96.3% matches resource limit [mem usage>80.0%]
    ...

A more readable format:

    $ vi /etc/rsyslog.conf
    ...
    #
    # Use traditional timestamp format.
    # To enable high precision timestamps, comment out the following line.
    #
    $template TraditionalFormatWithPRI,"%pri-text%: %timegenerated% %HOSTNAME% %syslogtag%%msg:::drop-last-lf%\n"
    $ActionFileDefaultTemplate TraditionalFormatWithPRI
    ...
    $ sudo service rsyslog restart

would allow you to search as well:

    $ grep -E '\.error|\.err|\.crit|\.alert|\.emerg|\.panic' /var/log/syslog
    ...
    daemon.err<27>: Nov 26 13:10:18 myserver monit[17496]: 'myserver' mem usage of 95.9% matches resource limit [mem usage>80.0%]

From http://www.rsyslog.com/doc/rsyslog_conf_filter.html valid values are debug, info, notice, warning, warn (same as warning), err, error (same as err), crit, alert, emerg, panic (same as emerg).

Here is how to force an alert from the cron facility for testing purposes:

    logger -p "cron.alert" "This is a test alert that should be identified by logMonitor"

You will get:

    cron.alert<73>: Aug 27 08:42:14 sftp krfsadmin: This is a test alert that should be identified by logMOnitor

Which you could inspect with logMonitor.
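The numbers in angle brackets in the lines above (<27> and <73>) are the syslog PRI value, which is the facility code multiplied by 8 plus the severity code. A short Python sketch of that arithmetic follows. The function names are hypothetical, and the facility table only lists the standard codes 0 to 9, falling back to the bare number for anything else.

```python
# Standard syslog severity keywords, indexed by numerical code (0-7).
SEVERITIES = ["emerg", "alert", "crit", "err", "warning", "notice", "info", "debug"]

# Facility keywords for codes 0-9; other codes fall back to their number.
FACILITIES = {0: "kern", 1: "user", 2: "mail", 3: "daemon", 4: "auth",
              5: "syslog", 6: "lpr", 7: "news", 8: "uucp", 9: "cron"}


def decode_pri(pri):
    """Split a syslog PRI value into (facility, severity) keywords."""
    if not 0 <= pri <= 191:
        raise ValueError("PRI must be between 0 and 191")
    facility_code, severity_code = divmod(pri, 8)
    facility = FACILITIES.get(facility_code, str(facility_code))
    return facility, SEVERITIES[severity_code]


def is_error_or_worse(pri):
    """True for severities 0-3, the same range the grep '^[0-3]' example selects."""
    return pri % 8 <= 3


if __name__ == "__main__":
    print(decode_pri(27))  # the monit line: daemon facility, err severity
    print(decode_pri(73))  # the logger test: cron facility, alert severity
```

For example, 27 is 3 * 8 + 3 (daemon, err) and 73 is 9 * 8 + 1 (cron, alert), which matches the text prefixes in the two log lines.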

Monday, November 24, 2014

Being an Effective Manager means being an Effective Coach

Being an Effective Manager means being an Effective Coach. A coach needs to know each client very well. They will all be different, they will have different objectives, and they will be able to achieve different goals. However, all clients must be willing to be trained and coached. The coach bases each individual plan on the client's existing and needed skills, the ability to perform based on personal goals, and the personal will of the individual to succeed. The relationship is bidirectional though: if the will is poor on either side, and/or the ability does not match the expected goals, and/or the skills do not match the expected level, then the client and the coach are not a good fit for each other.

Management is not any different. Each person is valuable one way or another but nobody is a good match for all types of jobs. The will is necessary, a no-brainer. A team member is expected to be driven by a will to contribute to the culture, value and profit of the whole group. Skill and ability are a different story.

The difference between skill and ability is very subtle. I tend to think that a team member has an ability problem when all the manager's resources to improve the skills of such a "direct" have been tried without success. Of course the development of the skills and ability of the "direct" will be affected by the skills and ability of the manager. So then how can we be effective managers?

To be effective managers we need to know each of our directs. They are all different, so in order to set them all up for the biggest possible success we need to work with them in a personalized way. I think managers have a lot to learn from the Montessori school. This is a daily task: if you are or want to be a manager you have to love teaching and caring for others' successes.

Java NoSuchAlgorithmException - SunJSSE, sun.security.ssl.SSLContextImpl$DefaultSSLContext

This is one of those misleading issues coming from a log trace which does not tell you the real cause. Most likely if you scroll up in the log file you will find something like:

Caused by: java.security.NoSuchAlgorithmException: Error constructing implementation (algorithm: Default, provider: SunJSSE, class: ...
Caused by: java.security.PrivilegedActionException: java.io.FileNotFoundException: /opt/tomcat/certs/customcert.p12 (No such file or directory)
...

As a general rule, restart the JVM and pay attention to absolutely all errors ;-)
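Since the real cause here was a missing keystore file, a quick pre-flight check before starting the JVM can save some log digging. The sketch below is an assumption of mine rather than part of the original fix, and check_keystore is a made-up name. Note that it tests readability for whoever runs it, so run it as the same user that runs Tomcat.

```shell
# Return 0 when the keystore path exists and is readable, 1 otherwise.
check_keystore() {
  if [ -r "$1" ]; then
    echo "OK: $1 is readable"
  else
    echo "MISSING: $1 does not exist or is not readable" >&2
    return 1
  fi
}

# Example (path taken from the trace above):
# check_keystore /opt/tomcat/certs/customcert.p12 || exit 1
```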

Wednesday, November 19, 2014

Mount CIFS, SAMBA, SMB in MAC OS X

sudo mount_smbfs '//domain;user@cifsserver.domain/path/to/share/' /local/path/to/mounting/point

Wednesday, November 12, 2014

javax.script.ScriptException: sun.org.mozilla.javascript.EvaluatorException: Encountered code generation error while compiling script: generated bytecode for method exceeds 64K limit

We got this error after upgrading LibreOffice, as the upgrade replaced the symlink "/usr/bin/java -> /etc/alternatives/java", which was still Java 6. This issue was corrected in Java 7, so replacing the symlink with the HotSpot JDK 7 or later should resolve the problem.
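To confirm which Java the symlink really resolves to, something like the following might help. It is a sketch only: the function names are invented for this post, and it assumes the usual 'java version "1.6.0_45"' style first line that java -version prints to stderr.

```shell
# Extract the major Java version from a version string.
# "1.6.0_45" -> 6 (legacy 1.x scheme), "11.0.2" -> 11.
java_major() {
  case "$1" in
    1.*) echo "$1" | cut -d. -f2 ;;
    *)   echo "$1" | cut -d. -f1 ;;
  esac
}

# Report the version string of the java binary found on the PATH.
# `java -version` writes to stderr, hence the 2>&1.
current_java_version() {
  java -version 2>&1 | head -n 1 | cut -d'"' -f2
}

# Example:
# readlink -f /usr/bin/java
# [ "$(java_major "$(current_java_version)")" -ge 7 ] || echo "Java 7 or later needed"
```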

Tuesday, November 11, 2014

Libreoffice hanging in headless mode unless using root user

A simple 'libreoffice --headless --help' hanging? Be sure ~/.config exists and its content is owned by the current user:

mkdir -p ~/.config
chown -R `logname`:`logname` ~/.config

The above did not work in Ubuntu 12.04, which runs version 3.5. However, you can install in 12.04 the same version run in 14.04:

sudo add-apt-repository -y ppa:libreoffice/libreoffice-4-2 && sudo apt-get update && sudo apt-get install libreoffice && libreoffice --version

In our case there were zillions of these processes stuck in the background BTW, so we had to kill them manually:

pkill libreoffice
pkill oosplash
pkill soffice.bin
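Before changing ownership blindly you may want to see what is actually owned by somebody else. A minimal sketch (not_owned_by_me is a hypothetical helper name, not something LibreOffice ships):

```shell
# List entries under a directory that are NOT owned by the current user.
# An empty result means ownership is probably not the cause of the hang.
not_owned_by_me() {
  find "$1" ! -user "$(id -un)" -print
}

# Example:
# not_owned_by_me ~/.config
```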

Monday, November 03, 2014

The path '.' appears to be part of a Subversion 1.7 or greater

Is Subversion asking for an upgrade, for example after running 'svn status -u'?

svn: The path '.' appears to be part of a Subversion 1.7 or greater working copy. Please upgrade your Subversion client to use this working copy.

sudo add-apt-repository -y ppa:svn/ppa
sudo apt-get update
sudo apt-get upgrade
sudo apt-get install subversion

Friday, October 31, 2014

Thread safe concurrent svn update

Subversion (svn) update is not thread safe, which means you cannot script an update that could run at the same time as some other update process on the same working copy, or you will face:

svn: Working copy '.' locked
svn: run 'svn cleanup' to remove locks (type 'svn help cleanup' for details)

# How to test this is a valid solution?
# 1. Run 'while true; do svn update; done' in one SSH session
# 2. Run the below 'while' in a separate SSH session until you see the echo message indicating that there is locking
set +e
while svn update 2>&1 | grep "Working copy.*locked"; do echo "Retrying 'svn update' after finding a locked working copy"; sleep 5; done
set -e
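The loop above retries forever, which is fine for a quick test but not ideal inside a deployment script. Below is a bounded variant, as a sketch: retry_while_locked and its arguments are my own naming, and it only looks for the same "Working copy ... locked" text shown above.

```shell
# Retry a command while its output reports a locked working copy.
# Usage: retry_while_locked MAX_ATTEMPTS DELAY_SECONDS command [args...]
retry_while_locked() {
  max_attempts=$1
  delay=$2
  shift 2
  attempt=1
  while [ "$attempt" -le "$max_attempts" ]; do
    # Capture output and exit status without tripping 'set -e'.
    output=$("$@" 2>&1) && status=0 || status=$?
    if ! printf '%s\n' "$output" | grep -q "Working copy.*locked"; then
      printf '%s\n' "$output"
      return "$status"
    fi
    echo "Attempt $attempt: working copy locked, retrying in ${delay}s" >&2
    attempt=$((attempt + 1))
    sleep "$delay"
  done
  echo "Giving up after $max_attempts attempts" >&2
  return 1
}

# Example:
# retry_while_locked 10 5 svn update
```

Capping the attempts means a stale lock (one that really needs 'svn cleanup') fails the script instead of hanging it.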

Thursday, October 30, 2014

Kanban as a driver for continuous delivery

Our Kanban journey so far has been a rewarding experience. You can check my presentation on Continuous delivery to learn why and vote for yet another presentation on the subject in the upcoming 2014 ITPalooza event. The more likes I get the better chances I have to win a spot for the presentation.

Tuesday, October 28, 2014

On security: Avoid weak SSL v3

SSL v3 is a weak protocol we all use without noticing when we access anything “secure” on the web including native applications in our phones.

Application providers should remove support for it, and users/help desk personnel should update browsers. Failure to do this will increase the chances of getting any of your online accounts compromised.

What steps should you follow to protect yourself?

- Go to https://dev.ssllabs.com/ssltest/viewMyClient.html to understand if your browser is secure

- If you get a message like "Your user agent is vulnerable. You should disable SSL 3." then follow the instructions from https://zmap.io/sslv3/browsers.html

Monday, October 27, 2014

On security: Test if your site is still using weak SHA1 from command line

Security wise you should check if your website is still using the weak SHA1 algorithm to sign your domain certificate. Marketing wise as well. With Chrome being one of the major web browsers in use out there, your users will very soon feel insecure on your website unless you sign your certificate with the sha256 hash algorithm.

Google has announced Chrome will start warning users who try to visit websites that still use the sha1 signature algorithm to generate their SSL certificates.

You can of course use https://www.ssllabs.com/ssltest/analyze.html?d=$domain to test sites that are publicly reachable. For intranet sites, though, you need a different tool, which of course also works for external sites:

# You should get sha256WithRSAEncryption below. If you get sha1WithRSAEncryption chrome will warn users when visiting $domain
echo | openssl s_client -connect $domain:443 2>/dev/null | openssl x509 -text -noout | grep "Signature Algorithm"
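If you have many sites to check, the one liner can be wrapped in a couple of functions. This is a sketch under my own assumptions: the function names are invented, the weak/ok classification is a simplification (md5 is flagged as weak too), and the -servername option is added so that servers hosting several sites return the right certificate.

```shell
# Classify a "Signature Algorithm" line as printed by `openssl x509 -text`.
classify_signature() {
  lower=$(printf '%s' "$1" | tr '[:upper:]' '[:lower:]')
  case "$lower" in
    *sha1*|*md5*) echo "weak" ;;
    *sha256*|*sha384*|*sha512*) echo "ok" ;;
    *) echo "unknown" ;;
  esac
}

# Fetch the signature algorithm of the certificate served by host $1.
signature_of() {
  echo | openssl s_client -connect "$1:443" -servername "$1" 2>/dev/null |
    openssl x509 -noout -text | grep -m 1 "Signature Algorithm"
}

# Example:
# classify_signature "$(signature_of www.example.com)"
```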

Saturday, October 25, 2014

Measure throughput via iperf

# REMOTE: assuming Ubuntu
sudo apt-get install iperf
iperf -s
# LOCAL: assuming OS X
brew install iperf
iperf -c REMOTE

Wednesday, October 22, 2014

On security: Automate sftp when public key authentication is not available

The real question is why public key authentication is not available. Storing passwords and keeping them secure is a difficult task, especially when they are supposed to be used from automated code.

For some reason you still find servers and clients (which we do not control) that accept only passwords for authentication. My advice is to educate, but in many cases you are simply out of business if you do not "comply". Interesting ...

If you must connect using a password then the below should help. Use this at your own risk. Do not use it before communicating the risks.

Suppose you have a batch file with sftp commands, for example a simple dir command (and others). You can send those to the lftp command:

# INSECURE!!!
$ echo dir > /tmp/batchfile.sftp
$ cat /tmp/batchfile.sftp | lftp -u $user,$password sftp://$domain

Wednesday, October 08, 2014

svn: E175013: Access to '/some/dir' forbidden

The error below looks like a lack of permissions; however, neither the permissions nor the user's desktop environment, where the credentials were saved, had changed:

svn: E175013: Access to '/some/dir' forbidden

Looking inside "auth/svn.simple/*" I found a password, which I tried and which did not work. The stored password was incorrect, and the easiest way to correct the situation is to force a connection with the user again. The password will be prompted for, and after supplying it the svn.simple/* file will get updated:

svn --username myuser mkdir http......

Monday, October 06, 2014

Solaris 11 monit: cannot read status from the monit daemon

# Solaris 11 where we use inittab:
$ sudo monit summary
monit: cannot read status from the monit daemon
# This was resolved by issuing the below commands:
$ sudo monit quit
$ sudo init q
$ sudo monit summary

Thursday, September 25, 2014

OpenVPN Client for Linux ( No GUI needed )

### Setup: Assuming the ovpn file is named client.ovpn and it is located in the root dir:
export HISTSIZE=0
user='user here'
pass='pass here'
sudo chmod 600 /client.ovpn
echo "$user" > /tmp/up
echo "$pass" >> /tmp/up
sudo mv /tmp/up /
sudo chmod 600 /up
export HISTSIZE=1000
### Run client:
sudo openvpn --config /client.ovpn --auth-user-pass /up
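One detail worth considering: with the steps above the credentials sit in /tmp with default permissions until the final chmod. A possible variation, sketched below with a made-up helper name (write_credentials), creates the file under a restrictive umask so it is private from the first byte.

```shell
# Write a two-line OpenVPN credentials file (user, then password) that is
# never readable by other users, not even briefly.
# Usage: write_credentials FILE USER PASSWORD
write_credentials() {
  (
    umask 077                 # files created in this subshell get mode 600
    rm -f "$1"                # drop any older copy with looser permissions
    printf '%s\n%s\n' "$2" "$3" > "$1"
  )
}

# Example (run as root so the file can live next to /client.ovpn):
# write_credentials /up 'user here' 'pass here'
```

The subshell keeps the umask change from leaking into the rest of your session.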

Thursday, September 11, 2014

Microphone not working in Windows 7 guest running on Virtualbox (v4.3.16) from a MAC OS X (Mavericks) host?

Microphone not working in Windows 7 guest running on Virtualbox (v4.3.16) from a MAC OS X (Mavericks) host?

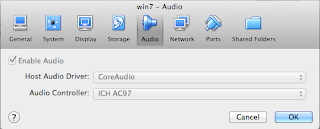

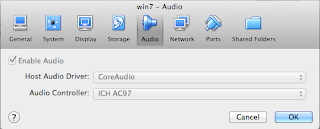

The very first thing you should do is to change the Audio Controller in VirtualBox. Below is a setting that worked for me:

Windows will complain about not finding a suitable audio driver but if you know that all you need is to install a "Realtek AC'97 Driver" for "Windows 7" then you will find http://www.ac97audiodriver.com

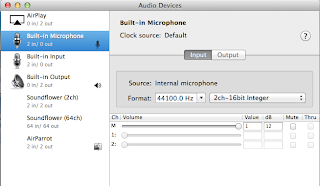

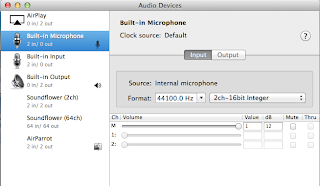

After installing the driver, followed by a Windows restart, you should be able to set up the microphone using the "configure/setup microphone wizard" options. If you run into trouble, make sure "properties/advanced" shows "2 channels, 16 bit, 44100 Hz (cd quality)" as the default format, as shown below:

I have read on the web the suggestion that this setting should match the host settings which you can set from the "Audio MIDI Setup" application. I have tried different combinations and it still works, the important thing is to rerun the setup microphone wizard to make sure distortion is kept to a minimum. In any case I left mine with the below settings:

Wednesday, September 10, 2014

Is your team continuously improving? Would you share your Agile / Lean Journey? Bring the JIT to the Software Industry

Is your team continuously improving? Would you share your Agile / Lean Journey? I hope that my presentation on Continuous delivery will invite others to help bring the Just In Time mentality to the Software Industry.

Thursday, August 28, 2014

Can we apply the 80-20 rule to find out the Minimal Marketable Feature?

Can we apply the 80-20 rule ( "for many events, roughly 80% of the effects come from 20% of the causes" ) to find out the Minimal Marketable Feature (MMF)?

In my personal experience, bloated requirements are the norm. So I make a practice (even in my personal life) of analyzing the root cause of problems to try to resolve as much as I can with the minimum possible effort. The 80-20 rule becomes my target, and unless someone before me has already applied it, I can say that in most cases I can at least present an option. Whether that is accepted or not depends on many other factors which I would rather not discuss here.

Why this rule is useful in software development is a well-known subject. But making the whole team aware of it comes in handy when there is a clear determination to spend 100% of the time producing value. If we can cut the apparently needed requirements down to 20%, our productivity would literally skyrocket. Of course the earlier you do this analysis the better, but be prepared, because perhaps you, the software engineer, will teach a lesson to everybody above when you demonstrate that what was asked is way more than what is needed to resolve the real need of the stakeholder.

Monday, August 25, 2014

We are hiring an experienced Javascript developer who has worked on angularjs for a while

If you have built directives to the point of having configurable widgets using AngularJS and you are interested in working in an agile lean environment where people come first please apply here.

Saturday, August 23, 2014

How to eliminate the waste associated to prioritization and estimation activities?

How to eliminate the waste associated to prioritization and estimation activities?

- 5 minutes per participant meeting: Business stakeholders periodically (depending on how often there are slots available from the IT team) sit down to discuss a list of features they need. This list must be short; to ensure that it is, stakeholders come with just one feature per participant (if the number of slots is bigger than the number of participants, adjust the number of features per participant). Each feature must be presented with its numeric impact for the business ( expected return in terms of hours saved, dollars saved, dollars earned, client acquisition and retention, etc ) and concrete acceptance criteria ( a feature must be testable and the resulting quality must be measurable ). Each participant is entitled to a maximum of 5 minutes to present his/her feature. Not all participants will make a presentation: sometimes the very first participant shows a big saving idea that nobody else can compete with, and the meeting ends immediately. That is the idea which should be passed to the IT team.

- The IT team does a Business Analysis, eliciting the requirements. The responsible IT person (let us call that person Business Analyst or BA) divides the implementation of the idea into the smallest possible pieces that will provide value after an isolated deployment, no bells and whistles. In other words, the BA divides it into Minimal Marketable Features (MMF).

- The BA shares with the IT development team the top idea and the breakdown.

- IT engineers READ the proposal and tag each piece with 1 of a limited number of selections from the Fibonacci sequence representing the time in hours (0 1 2 3 5 8 13 21 34 55 89). Hopefully there is nothing beyond 21, and ideally everything should be less than 8 hours (an MMF per day!!!)

- The BA informs Business and gets approval for the MMFs. Note how business can now discard some bells and whistles when they realize a few MMFs will provide the same ROI. Ideally the BA actively pushes to discard functionality that is expensive without actually bringing back a substantial gain.

- Developers deliver the MMFs and create new slots for the cycle to start all over again.

Wednesday, August 20, 2014

This is a Summary of my posts related to Agile and Lean Project Management

This is a Summary of my posts related to Agile and Lean Project Management which I will try to maintain down the road. I hope it will allow me to share with others who try everyday to lead their teams on the difficult path to achieve a constant pace, predictable and high quality delivery.

http://thinkinginsoftware.blogspot.com/2014/11/being-effective-manager-means-being.html

http://thinkinginsoftware.blogspot.com/2014/10/kanban-as-driver-for-continuous-delivery.html

http://thinkinginsoftware.blogspot.com/2014/08/can-we-apply-80-20-rule-to-find-out.html

http://thinkinginsoftware.blogspot.com/2014/08/how-to-eliminate-waste-associated-to.html

http://thinkinginsoftware.blogspot.com/2014/08/define-pm-in-three-words-predictable.html

http://thinkinginsoftware.blogspot.com/2014/08/speaking-about-software-productivity.html

http://thinkinginsoftware.blogspot.com/2014/08/does-project-management-provides.html

http://thinkinginsoftware.blogspot.com/2014/06/on-productivity-what-versus-how-defines.html

http://thinkinginsoftware.blogspot.com/2014/06/continuous-delivery-must-not-affect.html

http://thinkinginsoftware.blogspot.com/2014/05/continuous-delivery-needs-faster-server.html

http://thinkinginsoftware.blogspot.com/2014/05/is-tdd-dead.html

http://thinkinginsoftware.blogspot.com/2014/04/small-and-medium-tests-should-never.html

http://thinkinginsoftware.blogspot.com/2014/04/continuous-integration-ci-makes.html

http://thinkinginsoftware.blogspot.com/2014/04/agile-interdependence-as-software.html

http://thinkinginsoftware.blogspot.com/2014/04/have-product-vision-before-blaming-it.html

http://thinkinginsoftware.blogspot.com/2014/04/non-functional-requirements-should-be.html

http://thinkinginsoftware.blogspot.com/2014/04/on-agile-minimum-marketable-feature-mmf.html

http://thinkinginsoftware.blogspot.com/2014/04/personal-wip-limit-directly-impacts.html

http://thinkinginsoftware.blogspot.com/2014/04/having-exploratory-meeting-ask-how-will.html

http://thinkinginsoftware.blogspot.com/2014/04/test-coverage-is-secondary-to-defect.html

http://thinkinginsoftware.blogspot.com/2014/04/continuous-improvement-for-people.html

http://thinkinginsoftware.blogspot.com/2014/04/are-you-developing-product-or.html

http://thinkinginsoftware.blogspot.com/2014/04/got-continuous-product-delivery-then.html

http://thinkinginsoftware.blogspot.com/2014/04/lean-is-clean-without-c-for-complexity.html

http://thinkinginsoftware.blogspot.com/2014/03/on-lean-thinking-continuous-delivery.html

http://thinkinginsoftware.blogspot.com/2014/02/wip-limit-versus-batch-size-fair.html

http://thinkinginsoftware.blogspot.com/2014/01/is-kanban-used-as-buzz-word.html

http://thinkinginsoftware.blogspot.com/2014/01/kanban-wip-limit-how-to.html

http://thinkinginsoftware.blogspot.com/2013/12/kanban-prioritization-estimation.html

http://thinkinginsoftware.blogspot.com/2013/11/kanban-show-stale-issues-to-reach.html

http://thinkinginsoftware.blogspot.com/2013/07/agile-team-did-you-already-script-your.html

http://thinkinginsoftware.blogspot.com/2013/04/it-agile-and-lean-hiring.html

http://thinkinginsoftware.blogspot.com/2013/02/the-car-factory-software-shop-and-la.html

Solaris calculating date difference

It is a common necessity to know how long our script takes. In Solaris 11, just as in any Linux system, the below will work:

$ START=$(date +%s)
$ END=$(date +%s)
$ DIFF=$(( $END - $START ))
$ echo $START
1408567010
$ echo $END
1408567017
$ echo "Finished in $DIFF seconds"
Finished in 7 seconds

However, in Solaris 10 and below it won't, because date just prints the %s back, and so a hack will be needed:

$ date +%s
%s
$ START=$(/usr/bin/truss /usr/bin/date 2>&1 | nawk -F= '/^time\(\)/ {gsub(/ /,"",$2);print $2}')
$ END=$(/usr/bin/truss /usr/bin/date 2>&1 | nawk -F= '/^time\(\)/ {gsub(/ /,"",$2);print $2}')
$ DIFF=$(( $END - $START ))
$ echo $END
1408566266
$ echo $START
1408566245
$ echo "Finished in $DIFF seconds"
Finished in 21 seconds
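If perl happens to be installed (an assumption on my side, I have not verified it on every Solaris 10 box), a fallback that avoids truss could look like the sketch below. The function names now_epoch and format_elapsed are made up for this example.

```shell
# Print seconds since the epoch, falling back to perl when `date +%s`
# is not supported and just echoes "%s" back.
now_epoch() {
  now=$(date +%s 2>/dev/null)
  case "$now" in
    ''|*[!0-9]*) perl -e 'print time, "\n"' ;;
    *) echo "$now" ;;
  esac
}

# Format a number of seconds as "Hh Mm Ss".
format_elapsed() {
  echo "$(( $1 / 3600 ))h $(( ($1 % 3600) / 60 ))m $(( $1 % 60 ))s"
}

# Example:
# START=$(now_epoch); ./my_script.sh; END=$(now_epoch)
# echo "Finished in $(format_elapsed $(( END - START )))"
```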

Monday, August 18, 2014

Define PM in three words: Predictable Quality Delivery

Define PM in three words: Predictable Quality Delivery.

I can't help but look at PM from the Product Management angle rather than from the Project Management angle. The three constraints (scope, schedule and cost) might be great for building the first version of a product, but enhancement, maintenance, the future, is a different story. Without constant-pace delivery it will be difficult to remain competitive. That constant pace cannot be sustained if quality is not the number one concern in your production lane.

In order to provide value, PM should ensure the team has "high quality predictable delivery".

Sunday, August 17, 2014

Speaking about software productivity, does your team write effective code?

Speaking about software productivity, does your team write effective code?

Most people think about efficiency when it comes to productivity. This is only logical, as most people think tactically in order to resolve specific problems. These are our "problem solvers". However, as a reminder, productivity is not just about efficiency but firstly about effectiveness. Thinking strategically *as well* will bring the team to the maximum level of productivity. These effective programmers are "solution providers". What can we do to be effective programmers?

Dr. Axel Rauschmayer, in Chapter 26 of his book Speaking JavaScript, explains, IMO, what the effective software development team should do. This applies to any programming language BTW. This is what I take from his statements, and what I support based on my own experience as a programmer:

- Define your code style and follow it. Be consistent.

- Use descriptive and meaningful identifiers: "redBalloon is easier to read than rdBlln"

- Break up long functions/methods into smaller ones. This will make the code *almost* self-documenting

- Use comments only to complement the code, meaning to explain the *why* and not the how

- Use documentation only to complement the comments, meaning to provide the big picture, how to get started and a glossary of probably unknown terms

- Write the simplest possible code which means code for a sustainable future. In the words of Brian Kernighan "Everyone knows that debugging is twice as hard as writing a program in the first place. So if you are as clever as you can be when you write it, how will you ever debug it?"

Thursday, August 14, 2014

Give current user access to run a script as root

In a one liner:

# Append to a file using sudo to keep this a one liner
echo "`logname` ALL=NOPASSWD:/opt/scripts/foo.sh" | sudo tee -a /etc/sudoers >/dev/null
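Appending straight to /etc/sudoers works, but a typo there can lock you out of sudo. A more defensive variation, sketched here under several assumptions of mine (a Linux box whose sudoers includes /etc/sudoers.d, GNU install, and a placeholder drop-in name "foo-script"), validates the rule with visudo first:

```shell
# Build the sudoers rule that lets user $1 run script $2 as root
# without a password prompt.
sudoers_rule() {
  printf '%s ALL=NOPASSWD:%s\n' "$1" "$2"
}

# Validate the rule with visudo before installing it as a drop-in file.
# The drop-in name "foo-script" is a placeholder chosen for this sketch.
install_sudoers_rule() {
  tmp=$(mktemp) || return 1
  sudoers_rule "$1" "$2" > "$tmp"
  if sudo visudo -cf "$tmp"; then
    sudo install -m 0440 -o root -g root "$tmp" /etc/sudoers.d/foo-script
  else
    echo "Rule rejected by visudo, nothing installed" >&2
  fi
  rm -f "$tmp"
}

# Example:
# install_sudoers_rule "$(logname)" /opt/scripts/foo.sh
```

It is no longer a one liner, but a rejected rule never reaches the live configuration.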

Tuesday, August 12, 2014

Install telnet in Solaris 11

Why would you do this? Make sure you communicate the security risk involved to those who asked you to enable it. Here it goes anyway:

    sudo pkg install /service/network/telnet
    sudo svcadm enable telnet
    svcs | grep telnet

Friday, August 01, 2014

Does Project Management provide Business Value?

Does Project Management provide Business Value? A similar question came up on LinkedIn and I decided to share my ideas on it.

Project Management is part of any product lifecycle. It is a discipline that should help a team achieve a specific goal. It is needed either as a responsibility of a dedicated individual/department or the whole team.

A team should achieve "predictable delivery with high quality", and for that to happen you will need to measure several productivity KPIs. In the words of Joseph E. Stiglitz, “What you measure affects what you do,” and “If you don’t measure the right thing, you don’t do the right thing.”

So IMO, if the PM discipline adjusts to these ideas, it is to be considered 'an integral part of the overall success of the team'. If these ideas have not yet been introduced in your team, then the PM discipline is a 'must-do' to get you to new levels of productivity. If the PM discipline is thought to be in place but is not adjusting to these ideas, I would definitely consider it an 'overhead'.

Wednesday, July 09, 2014

Solaris remote public key authorization

Still a pain in Solaris 11. OpenSSH ssh-copy-id still does not work as expected, so the process is manual unless you want to risk having the same key authorized multiple times on the remote host.

    #1 Generate local key
    ssh-keygen -t rsa -N '' -f /export/home/geneva/.ssh/id_rsa

    #2 Authorize it remotely:
    cat /export/home/geneva/.ssh/id_rsa.pub | ssh $remoteHost 'cat >> .ssh/authorized_keys && echo "Key copied"'

    #3 Go to the remote server to eliminate duplicates:
    gawk '!x[$0]++' ~/.ssh/authorized_keys > ~/.ssh/authorized_keys2
    mv ~/.ssh/authorized_keys2 ~/.ssh/authorized_keys
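An alternative to cleaning up duplicates afterwards is to append the key only when it is not already there. The sketch below shows the idea on a local file; `authorize_key` is a hypothetical helper name, and it assumes a POSIX grep that supports `-q`, `-x` and `-F` (on Solaris that may mean /usr/xpg4/bin/grep rather than /usr/bin/grep).

```shell
#!/usr/bin/env bash
# Sketch only: idempotent append of a public key to an authorized_keys file.

authorize_key() {
  local pub_key=$1 auth_file=$2
  mkdir -p "$(dirname "$auth_file")"
  touch "$auth_file"
  chmod 600 "$auth_file"
  # -F: fixed string, -x: match the whole line, -q: no output.
  if ! grep -qxF -- "$pub_key" "$auth_file"; then
    printf '%s\n' "$pub_key" >> "$auth_file"
  fi
}

# Usage, on the host that should accept the key:
#   authorize_key "$(cat id_rsa.pub)" "$HOME/.ssh/authorized_keys"
```

Running it twice with the same key leaves a single entry, so the deduplication step becomes unnecessary.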

Thursday, July 03, 2014

On SMTP: RCPT To: command verb versus to: header

Ever wondered why you got an email with "to:" being some other address and not yours? Perhaps you got an email stating "to: Undisclosed recipients:;" and asked yourself why.

To simplify the explanation: the RCPT command, with its "TO:" verb, directs the email to a specific address (the envelope recipient). In the last mile the email is received by that addressee, but only the "to:" header, if present, is visible in the message. If it is missing you get "to: Undisclosed recipients:;" and if it is set you get whatever it says. Clearly you can use a different email address there, which will in some cases generate a heck of a lot of confusion ;-). You can confirm this yourself just by using telnet as usual for SMTP:

    # WHEN "RCPT TO:" = "TO:" (to: <me@sample.com>)
    MAIL FROM:me@sample.com
    250 2.1.0 Sender OK
    RCPT TO:me@sample.com
    250 2.1.5 Recipient OK
    DATA
    354 Start mail input; end with <CRLF>.<CRLF>
    subject:Using RCPT TO: = to:
    to: me@sample.com
    .

    # WHEN "RCPT TO:" != "TO:" (to: <who@et.com>)
    MAIL FROM:me@sample.com
    250 2.1.0 Sender OK
    RCPT TO:me@sample.com
    250 2.1.5 Recipient OK
    DATA
    354 Start mail input; end with <CRLF>.<CRLF>
    subject:Using RCPT TO: != to:
    to: who@et.com
    .

    # WHEN "TO:" IS NOT USED (to: Undisclosed recipients:;)
    MAIL FROM:me@sample.com
    250 2.1.0 Sender OK
    RCPT TO:me@sample.com
    250 2.1.5 Recipient OK
    DATA
    354 Start mail input; end with <CRLF>.<CRLF>
    subject:Using no to:
    .
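The three cases differ only in the envelope recipient versus the optional "To:" header, which a small generator makes explicit. This is a sketch: `smtp_dialogue` is a hypothetical helper that only prints the client side of the conversation, and the HELO name, subject and body are placeholders.

```shell
#!/usr/bin/env bash
# Sketch only: print the client side of an SMTP session so the envelope
# recipient (RCPT TO) and the To: header can be set independently.

smtp_dialogue() {
  local sender=$1 envelope_rcpt=$2 header_to=$3
  printf 'HELO localhost\r\n'
  printf 'MAIL FROM:<%s>\r\n' "$sender"
  printf 'RCPT TO:<%s>\r\n' "$envelope_rcpt"   # decides who receives the mail
  printf 'DATA\r\n'
  printf 'Subject: envelope versus header\r\n'
  if [ -n "$header_to" ]; then
    printf 'To: %s\r\n' "$header_to"           # only what the reader sees
  fi
  printf '\r\n'                                # blank line ends the headers
  printf 'Test body\r\n'
  printf '.\r\n'                               # lone dot ends the message
  printf 'QUIT\r\n'
}

# Usage: print the lines to type into a telnet session, one at a time.
#   smtp_dialogue me@sample.com me@sample.com who@et.com
#   smtp_dialogue me@sample.com me@sample.com ""   # no To: header at all
```

The output is meant to be typed or pasted line by line while reading the server replies; servers may reject a client that sends everything at once without waiting.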

Tuesday, July 01, 2014

Variable syntax: cshell is picky. Use braces to refer to variables

If cshell (csh) is your default shell, ~/.cshrc will be parsed after you log in. If you get the error "variable syntax", your next step is to figure out which shell config file is declaring a variable incorrectly. The snippet below is correct; just remove the braces and you will end up with the "variable syntax" error:

    # braces are needed to avoid "variable syntax" error
    setenv PATH $PATH:/usr/sbin:${GVHOME}/bin:/opt/csw/bin:/usr/sfw/bin:/usr/local/bin

Friday, June 27, 2014

Solaris pkgutil is not idempotent

Life would be easier if command-line tools never used a nonzero exit code unless a 'real' error occurs. The fact that I am trying to install an existing package again should not result in an 'error', but Solaris returns status 4 when running 'pkg install', with the description "No updates necessary for this image.". You have no other option than handling this on a per-package basis, as I show below using a Plain Old Bash (POB) recipe:

    # Initialize ret so the test below also works when pkg succeeds
    ret=0
    pkg install libxaw5 || ret=$?
    if [ "$ret" -ne 0 ] && [ "$ret" -ne 4 ]; then
      echo "$ret is an error exit code. We only skip exit code 4 which means 'no updates necessary'"
      exit 1
    fi
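When several packages need the same treatment, the check can be wrapped once instead of repeated. A minimal sketch follows; `run_tolerating` is a hypothetical helper name, and it simply treats one extra exit code as success.

```shell
#!/usr/bin/env bash
# Sketch only: run a command and treat one specific nonzero exit code as OK.

run_tolerating() {
  local tolerated=$1
  shift
  local status=0
  "$@" || status=$?
  if [ "$status" -eq 0 ] || [ "$status" -eq "$tolerated" ]; then
    return 0
  fi
  echo "'$*' failed with exit code $status" >&2
  return "$status"
}

# Usage: exit code 4 from pkg means "no updates necessary".
#   run_tolerating 4 pkg install libxaw5 || exit 1
```

The wrapper keeps the original exit code for real failures, so callers can still distinguish them.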

Devops need no words but code: How to forward all Solaris user emails to an external email account

Devops need no words but code.

    smarthost=mail.sample.com
    user=me
    alertlist=alert@mail.sample.com
    # Point both sendmail configs at the smart host
    /opt/csw/bin/gsed -i "s/^DS.*/DS $smarthost/" /etc/mail/submit.cf
    /opt/csw/bin/gsed -i "s/^DS.*/DS $smarthost/" /etc/mail/sendmail.cf
    # Replace any existing aliases for the user and for root
    /opt/csw/bin/gsed -i "/^$user:/d" /etc/mail/aliases
    echo "$user:$alertlist" >> /etc/mail/aliases
    /opt/csw/bin/gsed -i "/^root:/d" /etc/mail/aliases
    echo "root:$alertlist" >> /etc/mail/aliases
    newaliases
    svcadm restart sendmail
    # Send one test message per alias
    echo "So that we never miss again an important communication from servers" | mailx -s "$(hostname) As a sysadmin I want to receive my user mail notifications in my personal email address" "$user"
    echo "So that we never miss again an important communication from servers" | mailx -s "$(hostname) As a sysadmin I want to receive root mail notifications in my personal email address" root
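The delete-then-append pattern used for the aliases can be factored into one function that is safe to run repeatedly and does not depend on GNU sed. This is a sketch: `set_alias` is a hypothetical helper, and it assumes the aliases file exists and that alias names contain no regular expression metacharacters.

```shell
#!/usr/bin/env bash
# Sketch only: set an alias in an aliases-style file, replacing any old entry.

set_alias() {
  local aliases_file=$1 name=$2 target=$3
  local tmp
  tmp=$(mktemp) || return 1
  # Keep every line except an existing entry for this alias.
  # grep -v exits 1 when nothing is left to print, which is fine here.
  grep -v "^${name}:" "$aliases_file" > "$tmp" || true
  printf '%s:%s\n' "$name" "$target" >> "$tmp"
  # Overwrite in place so the file keeps its owner and permissions.
  cat "$tmp" > "$aliases_file"
  rm -f "$tmp"
}

# Usage (as root), followed by newaliases:
#   set_alias /etc/mail/aliases root alert@mail.sample.com
```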

Monday, June 23, 2014

Talend tFileExcelInput uses jexcelapi which is deprecated instead of favoring Apache POI

Talend tFileExcelInput uses jexcelapi, which is deprecated, instead of favoring Apache POI. The alternative seems to be using one of the tFileExcel* components from Talend Exchange, or converting Excel to CSV from the command line and then processing that file format later on.

Thursday, June 19, 2014

The most efficient way to send emails from Ubuntu shell script or cron

What is the most efficient way to send an email from an Ubuntu shell script or cron? I have found that sendEmail wins the battle:

    sudo apt-get install sendemail
    echo "$CONTENT_BODY" | \
      sendEmail -f "$FROM" -t "$TO1" "$TO2" \
      -s "$MAIL_SERVER_HOSTNAME" -u "$SUBJECT" > /dev/null

Here is an example of its usage from cron BTW.

How difficult is to report JIRA Worklog?

How difficult is it to report on the JIRA worklog? There are several plugins and a couple of free API calls, but none of them so far can report on a basic metric: how many hours each member of the team worked per ticket on a particular date or date range.

I do not like to go to the database directly and prefer API endpoints. However, while I wait for a free solution to this problem, I guess the most effective way to pull such information is, unfortunately, to query the JIRA database directly.

Below is an example to get the hours per team member and ticket for yesterday. You could tweak this example to get all kinds of information about the worklog.

    # edit through crontab -e
    JIRA_PWD=jiraPassword
    JIRA_TO1=teammail1@sample.com
    JIRA_TO2=teammail2@sample.com
    JIRA_FROM=jira@sample.com
    MAIL_SERVER=mail.sample.com:25
    30 08 * * * mysql -vvv -u jira -p$JIRA_PWD jira -e 'select concat(c.pkey, "-", b.issuenum) as issuekey, AUTHOR, issueid, a.STARTDATE, timeworked / 3600 as yesterday_hours, timespent / 3600 as total_hours from worklog a inner join jiraissue b on a.issueid=b.ID inner join project c on b.PROJECT=c.id where date(a.STARTDATE) = date_sub(CURDATE(), interval 1 day) order by AUTHOR, c.pkey, b.issuenum, a.STARTDATE;' | sendEmail -f $JIRA_FROM -t $JIRA_TO1 $JIRA_TO2 -s $MAIL_SERVER -u "JIRA worklog for yesterday" > /dev/null

If you want to include custom fields like 'Team', see below:

| # The below query allows to extract worklog from the previous day in JIRA | |

| # It returns the JIRA identifier, the author, a custom field called Team, | |

| # the start date, the hours worked yesterday | |

| # and the total hours being worked so far | |

| SELECT Concat(p.pkey, "-", ji.issuenum) AS issuekey, | |

| author, | |

| cfo.customvalue AS Team, | |

| wl.startdate, | |

| timeworked / 3600 AS yesterday_hours, | |

| timespent / 3600 AS total_hours | |

| FROM worklog wl | |

| INNER JOIN jiraissue ji | |